Intro

Apache Spark is one of the most advanced and powerful computation engines for big data analytics. Besides the core data engine, it also provides libraries for streaming (Spark Streaming), machine learning (MLlib) and graph processing (GraphX).

Historically, Spark emerged as a successor to the Hadoop ecosystem, with the following key advantages:

- Spark provides a significant performance improvement by keeping data in memory, while Hadoop relies on slow disk access

- Spark has rich programming APIs compared to Hadoop’s restrictive MapReduce model

- Spark is compatible with Hadoop, so it can run on top of an existing Hadoop ecosystem

Zentaly is a team of experts with years of experience building Spark-based big data business solutions. In this article I would like to share our knowledge with everyone who is about to start a new Apache Spark project.

Challenges

The content is structured as a series of challenges and recipes to make it easier to read.

Challenge #1: Do we really need Spark?

As with most software project kick-offs, the business may want to know why resources and money should be allocated to a particular project. While the benefits might look obvious to software engineers, it always takes extra work to translate them into KPIs/OKRs.

Recipe

You should translate technical benefits into numeric values (KPIs/OKRs) so that business stakeholders can understand and measure their impact on the business.

Examples

- You are going to decrease “Shopping Cart Checkout” processing time from 2 hours to 10 minutes per user. In practice, this means that users will get a better response time and be less disappointed. From a business perspective, it means that purchase returns will decrease by 5% and the number of support calls will decrease by 15%, which in total will increase revenue by 3% and decrease costs by 7% (the specific numbers must be precisely measured).

- You are going to extend ad campaign targeting dimensions to improve the quality of served ads. Logically, this will provide more suitable ads for each particular user. From a business perspective, it could be expressed as a 5% increase in CTC and CPI rates.
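The first example above boils down to simple arithmetic. A minimal sketch follows; the baseline revenue and cost figures are hypothetical placeholders, and only the processing times and percentages come from the example.

```python
def relative_change(before: float, after: float) -> float:
    """Return the relative change from `before` to `after` (negative means a decrease)."""
    return (after - before) / before


def profit_delta(revenue: float, costs: float,
                 revenue_change: float, cost_change: float) -> float:
    """Return the absolute profit change for the given relative revenue and cost changes."""
    new_profit = revenue * (1 + revenue_change) - costs * (1 + cost_change)
    return new_profit - (revenue - costs)


# Processing time from the example: 2 hours down to 10 minutes (both in minutes).
time_change = relative_change(before=120, after=10)
speedup = 120 / 10

# Hypothetical yearly baseline, for illustration only.
BASELINE_REVENUE = 1_000_000
BASELINE_COSTS = 600_000

# Revenue +3% and costs -7%, as in the example.
delta = profit_delta(BASELINE_REVENUE, BASELINE_COSTS,
                     revenue_change=0.03, cost_change=-0.07)

print(f"Processing time change: {time_change:.1%}")
print(f"Speed-up: {speedup:.0f}x")
print(f"Profit change: {delta:,.0f}")
```

Replacing the hypothetical baseline with measured figures gives stakeholders a number they can track after the project ships.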

Challenge #2: We can do it ourselves

“As people are building cars and rockets, it’s quite obvious that we can do it ourselves”. That’s exactly what some engineers might want to say when facing a new technology stack, especially one as complex as Spark.

Recipe

Introducing a new framework like Apache Spark, or any other technology, involves a learning curve for engineers. Investing time in learning is the key to building a deep understanding of the technology and improving our skills.

So if you are going to start working with Spark, look for developers who volunteer to attend specialised training, or ask skilled employees to prepare internal knowledge-sharing sessions.

The more traction you build, the better the understanding and the stronger the skill set will grow inside the development team.

Challenge #3: We decided to start using Spark, how can we integrate it with existing infrastructure?

While creating Spark apps is relatively simple (you can read about it in my previous article), running Spark in production and integrating it with existing apps can be quite a challenging task. The reason is an old idea born in Hadoop times: “Moving code is cheaper than moving data”. As a successor of Hadoop, Spark follows this pattern too.

The “moving code” concept assumes that a big data application is built as a standalone module and shipped to a runtime environment (usually a cluster), where it is executed and managed by that environment in a very specific way. The pattern exists for a good reason: the environment must address a high level of complexity, such as:

- Automatic code distribution and execution on thousands of physical servers

- Horizontal scalability

- High availability

Today, a Spark execution environment in its most minimal form can be represented by the following diagram:

The number and variety of tools and platforms make the entire system quite complex. What initially looked like a fairly simple Spark app ends up, in real life, as a big standalone ecosystem.

Recipe #1

If you are a big company with a lot of resources, you will most probably end up buying a fairly expensive solution from a third-party vendor (e.g. Cloudera or Databricks) or even building this ecosystem yourself.

Unfortunately, integrating the big data stack with existing services additionally requires building a middleware integration layer. This middleware is usually implemented as:

- Service-to-service communication via REST or, rarely, SOAP

- Service communication via Enterprise Service Bus solutions (RabbitMQ, WebSphere, Kafka, amazon sms, etc.)

- Data sharing via a plain relational database or even files on distributed filesystem storage

Overall, this is the most popular approach today, which is why big data solutions are very rarely seen in mid-size companies.

Recipe #2

If your company is not too big and the amount of data you work with is limited, a decent option is to use Spark as a library inside your existing microservice (this works for any JVM-based language and for Python). In this case you can simply include the Spark library as a dependency and start writing big data code directly inside your existing app. This approach has the following limitations, though:

- Data processing will be limited to the single machine where your service is running

- Scalability is still possible, but only if you can partition your data into chunks by some criterion (preferably business domain) and make each service instance responsible for a single chunk

- You might need to resolve Java dependency conflicts due to the large number of third-party libraries pulled by Spark into the Java classpath (not a problem for PySpark)

The combination of simplicity and pragmatism makes this approach quite suitable for some projects, but obviously not all.
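The idea of making each service responsible for a single chunk of data can be sketched in plain Python. This is a minimal illustration and not part of Spark: the function name `chunk_for`, the shop identifiers and the instance count are hypothetical. A stable hash is used instead of the built-in `hash()`, which is randomised per process for strings.

```python
import hashlib


def chunk_for(business_key: str, num_instances: int) -> int:
    """Map a business key to the index of the service instance that owns it."""
    if num_instances <= 0:
        raise ValueError("num_instances must be positive")
    digest = hashlib.sha256(business_key.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer and reduce it to an index.
    return int.from_bytes(digest[:8], "big") % num_instances


# Hypothetical business keys, for example shop or tenant identifiers.
keys = ["shop-001", "shop-002", "shop-003", "shop-004", "shop-005"]
NUM_INSTANCES = 3

assignment = {key: chunk_for(key, NUM_INSTANCES) for key in keys}
for key, instance in assignment.items():
    print(f"{key} -> service instance {instance}")
```

Each service instance would then load and process only the records whose key maps to its own index. Changing the number of instances reassigns most keys, so this simple scheme suits a fixed set of instances.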

Challenge #4: Troubleshooting Spark failures

While they look simple on the surface, Spark apps usually process terabytes of data, so even a simple mistake can have a big impact. We can divide Spark failures into two main categories and review them separately.

Case #1: Application failures

This category includes all errors caused by design or development mistakes. Most often they show up as OOM (Out of Memory) errors.

Recipe

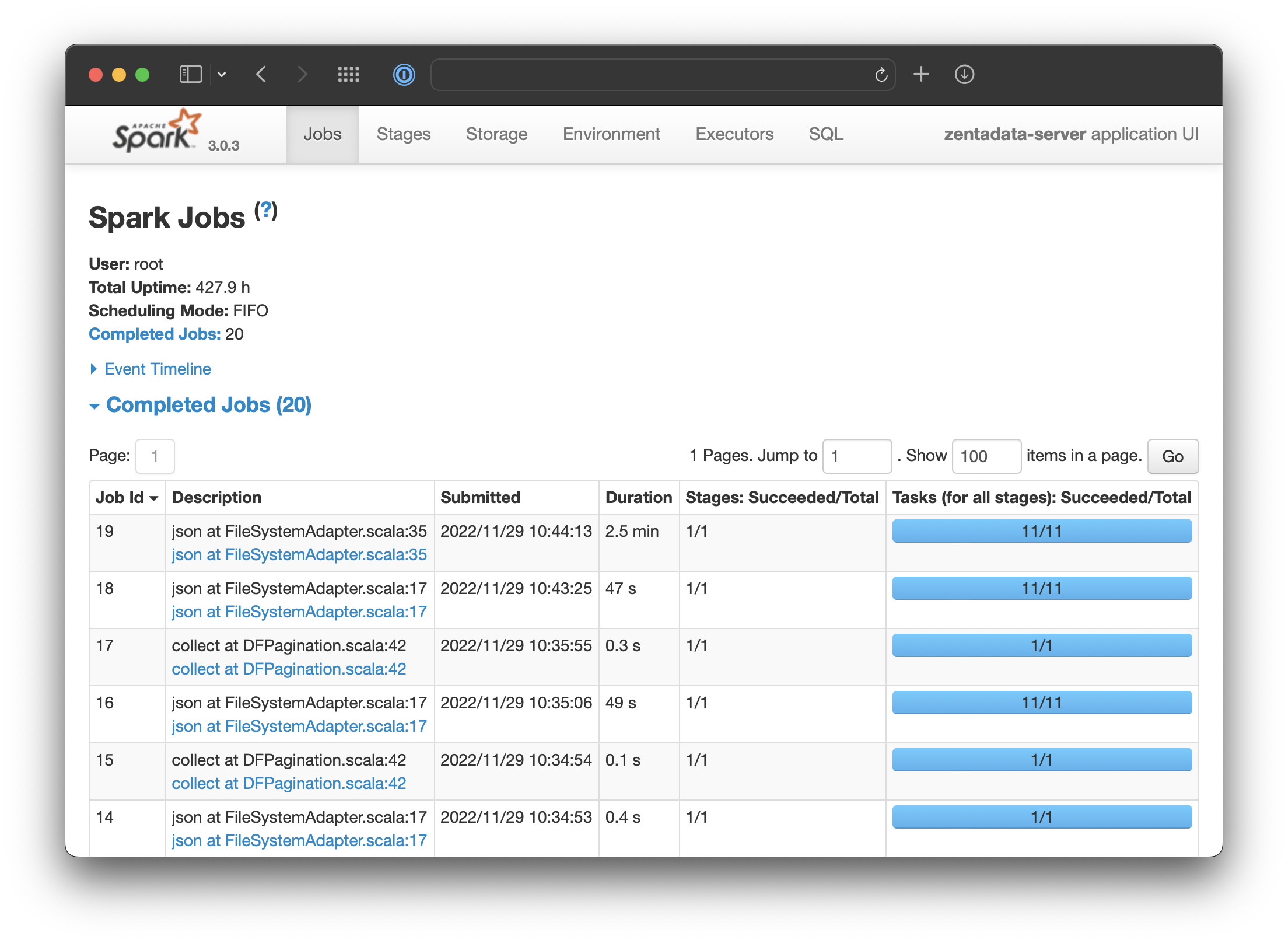

Spark provides a nice Spark UI app which helps developers understand how data flows between the data processing steps (aka stages). You should be able to identify the step at which the OOM occurred and how much data was processed at that step.

By default, Spark distributes the data load between cluster nodes quite effectively, so you should not hit a memory bottleneck on a single node. Unfortunately, the Spark API lets you invoke some operations that cause data to be concentrated on a single node, such as:

- dataframe.collect() - loads all data into memory on the driver node

- dataframe.groupBy() - aggregates all data by key; if the key has low variety, the entire dataframe might end up in a single partition, which can exceed the worker memory limit

- dataframe.join() (left/right/outer) - an invalid key during a join can cause significant data multiplication. Although this is purely a business logic error, it surfaces as an OOM, which makes it hard to investigate
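Two of these pitfalls, low key variety and join multiplication, can be checked on a sample of keys before running the full job. The sketch below uses plain Python on hypothetical in-memory key lists to show the arithmetic; the function names and sample data are illustrative assumptions, and for simplicity it counts rows of an inner equi-join (outer joins additionally keep unmatched rows). On a real dataframe, comparable per-key counts could be obtained with `dataframe.groupBy(key).count()`.

```python
from collections import Counter


def largest_key_share(keys: list[str]) -> float:
    """Return the fraction of rows that belong to the most frequent key."""
    if not keys:
        return 0.0
    counts = Counter(keys)
    return max(counts.values()) / len(keys)


def inner_join_row_count(left_keys: list[str], right_keys: list[str]) -> int:
    """Return how many rows an inner equi-join on these keys would produce."""
    left, right = Counter(left_keys), Counter(right_keys)
    # Every left row with key k pairs with every right row that has the same key.
    return sum(n * right[k] for k, n in left.items())


# Hypothetical sample: "unknown" is a placeholder key that dominates both sides.
orders = ["unknown"] * 8 + ["user-1", "user-2"]
visits = ["unknown"] * 5 + ["user-1", "user-1", "user-3"]

print(f"Largest key share in orders: {largest_key_share(orders):.0%}")
print(f"Rows after join: {inner_join_row_count(orders, visits)}")
```

In this sample, 18 input rows turn into 42 joined rows, almost all of them caused by the single placeholder key. On terabytes of data the same effect is what shows up as an OOM.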

Case #2: Environment failures

By design, the Spark runtime environment is implemented as a cluster deployed to multiple physical nodes (servers). Each node runs multiple Java processes communicating with each other. The following process types form the cluster topology:

- Cluster Manager - cluster wide process managing entire Spark cluster (aka Master)

- Worker - node level process responsible for app execution by spawning multiple Executors

- Driver - application level process responsible for converting your code into multiple tasks

- App Manager - application level process responsible for resource negotiation with Cluster Manager (might run in the same JVM with Driver)

- Executor - application level processes (multiple) responsible for task execution

Each process is a standalone Java app with its own configuration settings, intended to make it run optimally on the hardware resources provided.

Any misconfiguration of a particular process's settings might make the cluster unhealthy under big data loads. The full configuration list is outside the scope of this article and is quite an involved topic in itself.

Recipe #1

Cluster configuration is highly challenging, so it is no surprise that specialised solutions have been created. Commercially available: Databricks, Cloudera. Open source: Apache Ambari.

Recipe #2

You can also decide to manage your cluster configuration yourself. In this case, expect a really long journey before it is stable enough for production use. Depending on the team's level of expertise, it could take from 1 month to 1 year.

Challenge #5: Data security

The last but not least challenge is data security. With the CCPA and GDPR regulations having come to the enterprise world, data security is a must nowadays. An open investigation showed that 49% of enterprises have faced data security issues in their big data projects.

Out of the box, Spark has no solution for securing sensitive user data.

Recipe

To support data security, this challenge must be taken seriously right from the project kick-off. The architecture should be designed around data security as a first-class citizen. Postponing data security to a later stage might require an entire system rewrite and waste a lot of resources.

The design approach should take into account multiple data security requirements and address them accordingly:

- Data removal - how will data be removed on user request?

- Data cleanup - how will the system identify and remove sensitive user information?

- Data anonymisation/pseudonymisation - how will user data be secured without losing the ability to analyse it?
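As one possible illustration of pseudonymisation, a user identifier can be replaced with a keyed hash, so that records of the same user can still be grouped and joined while the original identifier is not stored. This is a minimal sketch using Python's standard `hmac` module; the function name, the sample records and the hard-coded key are hypothetical, and a real system would load the key from a secret store. It is not a complete GDPR/CCPA solution.

```python
import hashlib
import hmac


def pseudonymise(user_id: str, secret_key: bytes) -> str:
    """Return a stable keyed-hash token that stands in for the user identifier."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key for illustration only; never hard-code a real key.
SECRET_KEY = b"example-key-do-not-use"

events = [
    {"user_id": "alice@example.com", "amount": 30},
    {"user_id": "bob@example.com", "amount": 12},
    {"user_id": "alice@example.com", "amount": 8},
]

# Replace the identifier with its token and keep only the analytical fields.
safe_events = [
    {"user_token": pseudonymise(e["user_id"], SECRET_KEY), "amount": e["amount"]}
    for e in events
]

# Analysis still works on tokens: total amount per pseudonymous user.
totals: dict[str, int] = {}
for e in safe_events:
    totals[e["user_token"]] = totals.get(e["user_token"], 0) + e["amount"]

print(totals)
```

Because the same key always yields the same token, aggregations and joins keep working, while anyone without the key cannot recompute tokens from known identifiers.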

Summary

We have reviewed the most critical challenges you might face when starting a new Apache Spark project. To address those challenges, we provided practical recipes that have proven to work in our long-term experience.

Nevertheless, we highly recommend trying our Zentadata Platform for free. It addresses all of these technical challenges and provides a lot of extra features, such as data security and the ability to run scalable big data jobs within a standalone microservice.